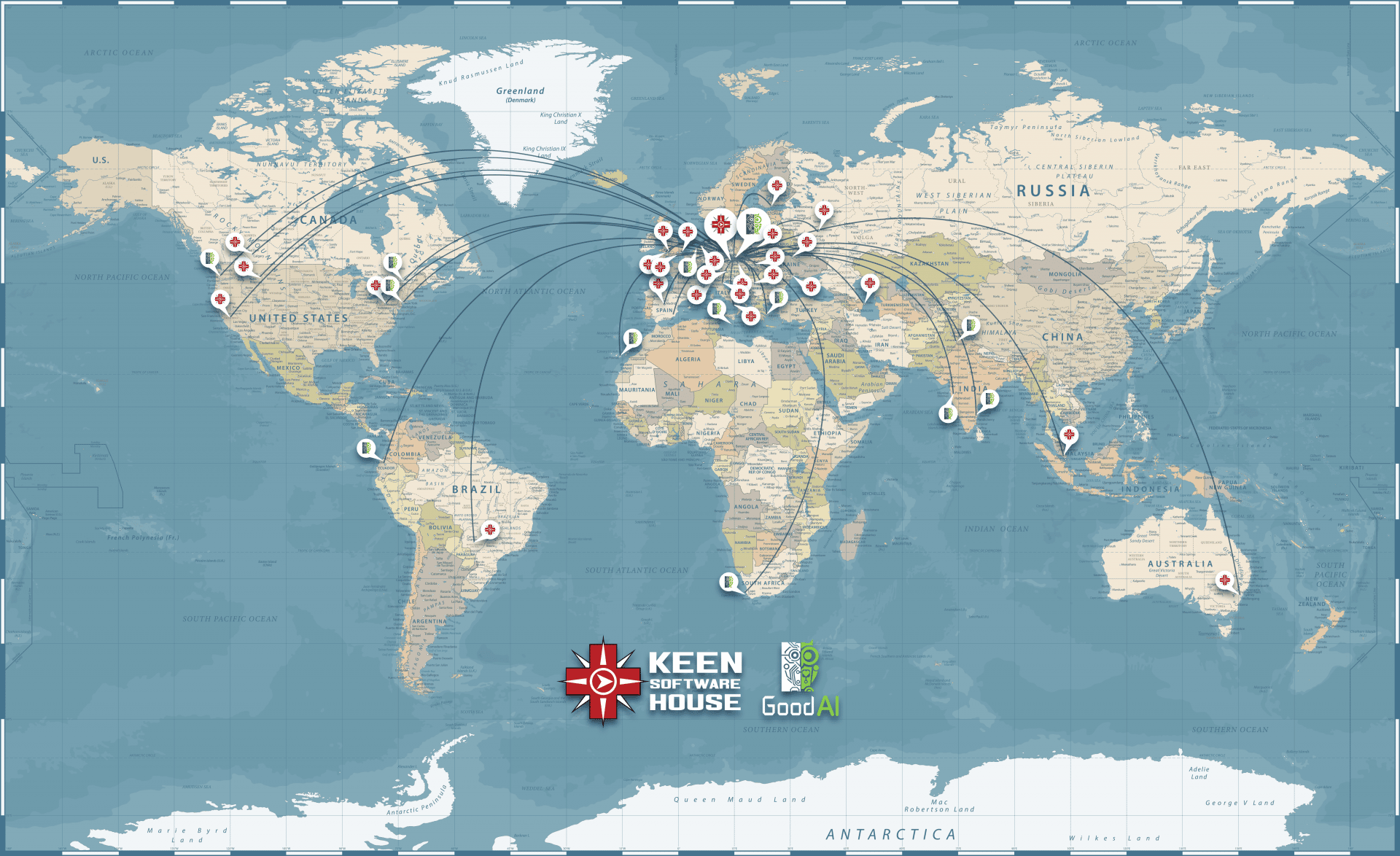

GoodAI was founded in 2014 with a $10M personal investment from Marek Rosa. Our long-term goal is to build general artificial intelligence that will automate cognitive processes in science, technology, business, and other fields. We conduct our own research, advocate fundamental AI research at the EU governmental level, and forge a community of like-minded groups through the GoodAI Grants program.

Marek Rosa

Founder and CEO

Marek Rosa, CEO/CTO, founded GoodAI with a personal investment of $10M. He set GoodAI's long-term vision and directs the company's focus, leading both its technical research and its business side. As a researcher and programmer himself, he takes a hands-on role in our daily research and development.