Forbes interview with Marek Rosa, translated from Slovak. The original can be found here.

Why is stopping the development of the OpenAI platform foolish, and why is it unlikely that artificial intelligence will kill us all? Slovak developer Marek Rosa, who has been planning for several months to launch a world-unique AI, answers these questions.

Rosa, who is behind the Czech companies GoodAI and Keen Software House, is testing the capabilities of general artificial intelligence in an environment close to his heart – computer games.

No one in the world has yet published a similar solution, and he plans to release his in a few months.

Calls to slow down AI are silly

Which of your companies currently takes up more of your time: the game studio Keen Software House or the development company GoodAI?

Ninety percent of my time is occupied by GoodAI, as Keen Software House is an established company with set projects and vision, with designs that I have already determined.

At GoodAI, on the other hand, we are working on new things that are very dynamic. New things are happening in AI almost every day.

What do you think of the open letter by Elon Musk and other technology leaders calling for a halt to the development of artificial intelligence by OpenAI?

I don’t like the idea of an open letter, and I consider calls to slow down development to be downright foolish. However, I agree that it is necessary to be careful and approach regulation sensibly. But I wouldn’t want to overdo that either.

We may end up in a situation that I liken to regulation in drug development. Development itself takes a very long time because it is a heavily regulated environment. I would not want to take drugs that have not been tested.

But regulations also have an impact on innovation in the sector. That is why I would not want AI to be extremely regulated: it could slow the pace of innovation. What is needed, first of all, is to not overestimate the risks.

What risks are you referring to?

One risk is that AI will put us out of work because it will be able to do my job and yours faster and cheaper. This means that it will be more economical for the company to use AI instead of people.

It may not even be necessary to have business owners. All of this can have brutal effects on society and we need to be prepared for it. On the other hand, the aforementioned six-month postponement will not solve anything.

For the last ten years we have been listening to hysteria about this, which has not improved anything. Rather, I think we need to move forward, train AI, but also pay close attention to testing.

Writing theoretical articles won’t help; we need to create a system that works, and that will only happen if the training continues.

The second risk is that the AI will kill us all. But that’s as remote and small a probability as a meteor killing us all.

So how can it be prevented?

I believe that if autonomous AI systems are going to be made, care must be taken to control what such a system does. Simply so that it doesn’t do something dangerous itself.

This also applies to GoodAI, as we are developing an autonomous agent that, although it is placed in a computer game, can inadvertently decide to do something dangerous.

It is not yet a robust system. However, if there were billions of these agents in the world, no one would have a chance to control them. But at the moment, they can’t even learn from their mistakes and experiences.

Unpredictable reactions

How do you control their autonomy?

Imagine chatting with ChatGPT – in fact, our autonomous agent does a similar thing to ChatGPT. I instruct it to make some sort of plan.

For example, it writes a program using GPT, decides to run it, and if it detects that the program doesn’t work, the agent goes back to GPT to rework it. And this can go on and on.

So far it’s pretty slow, much like the ChatGPT bot’s responses when you use it. That means we can see in real time what’s going on and whether this agent is accidentally trying to take over the world.

If it ran faster, which it surely will someday, it could be more dangerous without controls. But we are not there yet.
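The generate, run and rework cycle described above can be sketched roughly as follows. This is a minimal illustration, not GoodAI's code: `agent_loop`, `try_run` and `fake_model` are hypothetical names, the model is a stub rather than a real GPT call, and the `log` list stands in for the real-time view of what the agent is doing.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical signature: (task, error from the last failed run) -> program source.
Generator = Callable[[str, str], str]


def try_run(source: str) -> Tuple[bool, str]:
    """Execute the source in a fresh namespace; return (succeeded, error text).

    Note: exec is not a sandbox. A real agent would need proper isolation.
    """
    try:
        exec(compile(source, "<agent-program>", "exec"), {})
        return True, ""
    except Exception as exc:  # any failure becomes feedback for the next draft
        return False, f"{type(exc).__name__}: {exc}"


def agent_loop(
    task: str,
    generate: Generator,
    max_rounds: int = 5,
    log: Optional[List[Tuple[int, bool, str]]] = None,
) -> Optional[str]:
    """Ask for a program, run it, and send the error back until it works.

    The round cap and the log are the control points: the loop cannot run
    forever, and every attempt is recorded for a human to inspect.
    """
    feedback = ""
    for round_no in range(1, max_rounds + 1):
        source = generate(task, feedback)
        ok, error = try_run(source)
        if log is not None:
            log.append((round_no, ok, error))
        if ok:
            return source
        feedback = error
    return None  # gave up after max_rounds attempts


def fake_model(task: str, feedback: str) -> str:
    """Stand-in for a GPT call: the first draft is buggy, the rework is not."""
    return "result = 1 / 1" if feedback else "result = 1 / 0"
```

Calling `agent_loop("divide", fake_model, log=[])` takes two rounds with this stub: the first draft fails with a division error, and the error text is passed back so the second draft can fix it.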

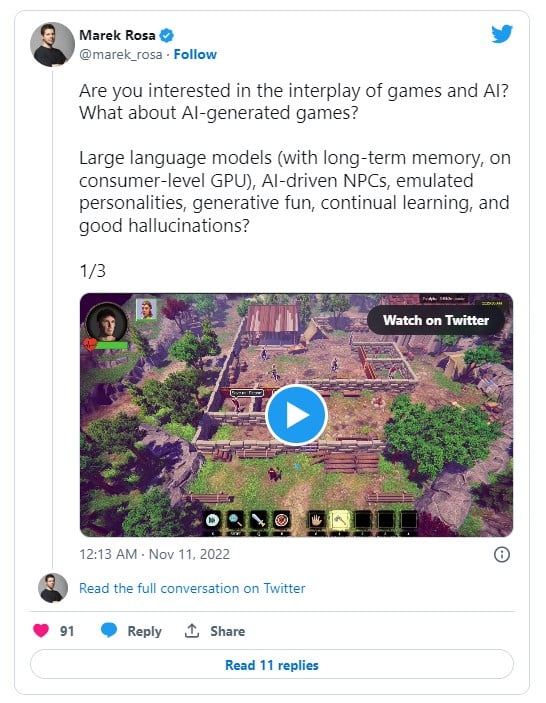

You incorporated these agents into games. Why did you decide on gaming?

We haven’t officially released the game yet, but when we do, we’ll be the first in the world to incorporate something like this into games. These are the so-called NPCs (non-player characters) that are commonly present in games.

Their exact behavior is scripted: for example, attack an enemy.

We’ve been going about it differently: using GPT, we’ve been teaching them what options they have in a given environment, how they should react based on their nature, their memories, and what they should do in a given environment.

GPT emulates their actions for us and we transfer them to the game.
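One way to picture this, as a rough sketch only: the NPC's nature, memories and available options are packed into a prompt, the model picks an option, and the game applies it. Everything here (`NPC`, `build_prompt`, `choose_action`, the prompt wording, the stub model) is an assumption made for illustration; the interview does not describe the actual implementation.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class NPC:
    name: str
    nature: str
    memories: List[str] = field(default_factory=list)


def build_prompt(npc: NPC, options: List[str], event: str) -> str:
    """Describe the character, what it remembers, and what it may do."""
    memories = "; ".join(npc.memories) or "none"
    return (
        f"You are {npc.name}. Nature: {npc.nature}. Memories: {memories}. "
        f"Event: {event} Options: {', '.join(options)}. Reply with one option."
    )


def choose_action(
    npc: NPC, options: List[str], event: str, model: Callable[[str], str]
) -> str:
    """Ask the model for an action and keep it only if the game supports it.

    Options are assumed to be lowercase; options[0] is the safe fallback.
    """
    reply = model(build_prompt(npc, options, event)).strip().lower()
    npc.memories.append(event)  # the event becomes part of long-term memory
    return reply if reply in options else options[0]


def fake_model(prompt: str) -> str:
    """Stand-in for a GPT call, so the sketch runs without any API."""
    return "argue with husband" if "kissing" in prompt else "idle"
```

With options `["idle", "argue with husband", "hit with frying pan"]` and an event mentioning the kiss, the stub returns `"argue with husband"`. A reply outside the list falls back to `"idle"`, so the game never receives an action it cannot perform.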

What does it actually look like in the game?

I like to use one scenario as an example: it involves two NPCs and one player. The NPCs are married to each other, and the player tells the wife that he saw her husband kissing another woman.

This annoys the character, whose nature is generated by artificial intelligence, and she goes to her husband, starts to argue with him, he scolds her, and after a while her nerves snap and she starts beating him with a frying pan.

And he runs away. What is interesting is that there is nothing scripted about it.

Artificial intelligence tries to emulate these two NPCs, their nature, and how they can interact with each other.

If I were to run this scene a second time, it could happen that the husband convinces the wife that he didn’t kiss anyone and that the player made it up; the wife then gets mad at the player and starts beating him with a frying pan.

NPCs of this kind will revolutionize gaming, because until now NPCs have been static. Now it can be more interesting.

How does this relate to GoodAI’s vision?

In the short term, we are focusing on developing general artificial intelligence in a gaming environment where we can test autonomous agents without the risk of destroying the world. It is an environment that is safe.

This means that if these agents don’t work 100%, the consequences are mitigated. It’s not as serious an environment as when talking about autonomous cars.

We have the opportunity to make a commercial product that does not have to meet the same quality standards as it would in another environment.

Are you looking to improve the gaming experience or test your AI this way?

It goes hand in hand. It detracts a bit from our long-term goal of general AI, because we have to optimize it for our players’ enjoyment, for example.

This means we want to artificially dramatize the characters to make them react more extremely. Because in a real-life scenario, if I told a woman that her husband was kissing another woman, she probably wouldn’t react within a few seconds. Rather, it would take longer.

But this is a game, so we have to dramatize a bit. Game agents have their own goals, and they know how to invent them. For this, they need to have a long-term memory, remember what they’ve seen and what they’ve thought, and be able to act accordingly.

At the same time, we need them to be able to function in an environment, to be able to obtain inputs from that environment and to be able to influence it. So in this regard, they have the same elements as general agents, or AGI.

The future of programming

What connection does AI have with the current boom around no-code platforms, i.e. platforms that make it easier for non-programmers to create software and are more user-friendly?

It’s a completely different matter. However, I see that no-code people are experimenting with using GPT to generate applications: in a chat they describe what kind of application they want, and GPT generates it for them in a language that the no-code platform understands.

However, there must always be, at least at a minimum level, some language that defines the application. I see the future of programming as developers interacting with ChatGPT-like systems, which will generate the resulting programs in the background.

My guess is that before there is any widespread no-code platform, programs will be created via GPT. No-code won’t even catch on, because it won’t have had enough time to do so before being replaced by a technology that is much more convenient and better.

If we return to gaming, the past years have been prosperous for this segment. How do you see it?

Probably fine. But I don’t see any positive or negative impact of Covid. Our sales fluctuate plus or minus 30 percent depending on how many updates we release.

Right now, we’re in a period where we haven’t released an update in a while because the one we’ve been working on turned out to be more complicated than anticipated.

I know there have been problems in the mobile business due to ad blocking, but it doesn’t concern us. Our flagship game Space Engineers has been selling in consistent numbers for the past ten years.

We are looking for an investor

You invested $10 million in GoodAI from the profits of your game studio Keen Software House. Is it enough to run such an ambitious company?

We have already used ten million and I had to put more money into it.

What amount are we talking about?

I don’t have a precise count, but beyond the ten million, I’ve put another two to four million into the company. Development costs us about two million dollars a year.

Two years ago, in our earlier interview, you stated that you were not looking for an investor. So has anything changed?

We weren’t really looking for an investor at the time. But now, because of the autonomous agents, an investor makes sense for us, which is why I’m currently in San Francisco.

Two years ago, we were focused on fundamental research and looking for an architecture that was scalable to general artificial intelligence.

However, thanks to GPT systems, today we can switch to commercialization mode, which was my real goal with GoodAI.

Not just to do basic research and write technical papers for conferences, but also to do something useful that can be sold. As far as investors are concerned, I’m trying to get a feel for the market, see what the opportunities are.

We are looking for investors who can put in more money than I can afford, so that we can scale the solution. It should be a higher amount, about 10 to 20 million dollars.

At the same time, we would like to release the game in a couple of months and if it is successful, which I believe it will be, it can be a good revenue source.

So are you planning to release the game this year?

Certainly yes. We’ve got it basically done, we just need to tweak it to make the game work robustly and make fun agent behavior happen more often.

We need to nudge them a little so that they behave more extremely, and do so more often.

Deadline after a decade

Apparently, the ten-year deadline you gave yourself to build general AI is slowly approaching. Will you make it?

The development of agents for our game goes hand in hand with the development of general AI. If we get to it, it will be thanks to GPT systems, which we didn’t invent but which came from outside. We will have developed general artificial intelligence on top of that. Our ten-year plan can be achieved because of that.

What do you think will have the biggest impact on gaming or AI in the near future?

For example, it could be virtual reality (VR), which is catching on well among gamers. A lot of games are being made for VR and I’m amazed at how well they’re taking off.

In addition, AI can be used to create content in games – such as graphics and models. But I don’t find that interesting, because it will actually just make game development cheaper and put someone out of a job. It won’t bring new value.

It’s a bit more interesting if AI tools can start to be used by graphic designers to improve their productivity. Then even a tiny team can make games of a quality that would otherwise require hundreds of people.

I think it will become the norm. In a few years, a team of three will be able to make a game that looks like Call of Duty.

The word AI is already being used by many, even smaller startups, and at times it can seem more like a buzzword, as blockchain once was, for example. How can one tell the difference?

What makes this different from blockchain and web3 (decentralization of the web, ed. note) is that those things were never useful to anyone. It was just hype. If I go to the store, buy bread and eat my fill, that bread is useful to me. That is an undeniable fact.

Blockchain, apart from a few cases that are also questionable, has not improved anyone’s life. AI, such as ChatGPT, has already improved the lives of a huge number of people, programmers and the like.

At least that’s where it differs. As for how to tell apart startups that use AI only as marketing: it’s been a problem for the last few years, when AI made up only one percent of the whole product rather than being the key element.

Now that people have some experience with it, it’s easy to determine whether a given AI is interesting. I wouldn’t worry about whether it’s just a buzzword.

We will probably end up in a situation where a startup team will not even have machine learning specialists, but rather programmers who can skillfully use GPT to improve their product.
